Physicians and their staff complete an average of 39 prior authorization requests per week. They spend roughly 13 hours processing them.¹ When denials are appealed, more than 80% of them are partially or fully overturned, which suggests the care was appropriate all along.²

That is not a utilization management program working as intended. That is a system generating unnecessary friction, burning clinical resources, and producing decisions it cannot defend.

Auto approvals were supposed to fix this. Route the obvious cases through automatically. Free up clinical reviewers for complex decisions. Cut turnaround times. Reduce provider abrasion.

Most health plans tried some version of this approach. Few got the results they expected.

The Auto Approval Promise vs. the Auto Approval Reality

The math behind auto approvals is straightforward. If a significant share of prior authorization requests are routine, policy aligned, and destined for approval anyway, why route them through manual clinical review?

The problem is execution. In a recent KFF analysis of Medicare Advantage data, denial rates ranged from 4.2% at Elevance Health to 12.8% at UnitedHealth Group.² Those rates might seem low. But when over 80% of appealed denials are overturned, the real story becomes clear: plans are denying care they will ultimately approve, just with extra steps, extra cost, and extra delay.

According to the CAQH Index, only 35% of medical prior authorizations are conducted fully electronically.³ Manual prior authorization transactions cost providers $10.97 each. Fully electronic ones cost $5.79, roughly half. For payers, the gap is even wider: $3.52 per manual transaction versus five cents for a fully electronic one.⁴
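To make the gap concrete, here is a minimal sketch of the per-transaction arithmetic using the figures quoted above. The function names, the annual volume, and the conversion share are illustrative assumptions, not numbers from any plan.

```python
from decimal import Decimal

# Per-transaction costs quoted above (US dollars).
COSTS = {
    "provider": {"manual": Decimal("10.97"), "electronic": Decimal("5.79")},
    "payer": {"manual": Decimal("3.52"), "electronic": Decimal("0.05")},
}


def savings_per_transaction(party: str) -> Decimal:
    """Dollar difference between a manual and a fully electronic transaction."""
    cost = COSTS[party]
    return cost["manual"] - cost["electronic"]


def projected_savings(party: str, annual_volume: int, share_converted: Decimal) -> Decimal:
    """Savings if `share_converted` of `annual_volume` moves from manual to electronic.

    Both inputs are hypothetical planning assumptions supplied by the caller.
    """
    return savings_per_transaction(party) * annual_volume * share_converted


if __name__ == "__main__":
    print(savings_per_transaction("provider"))  # 5.18
    print(savings_per_transaction("payer"))     # 3.47
    # Hypothetical plan: 100,000 manual requests a year, half of them converted.
    print(projected_savings("payer", 100_000, Decimal("0.5")))
```

On these figures, each transaction moved from manual to fully electronic saves a provider $5.18 and a payer $3.47.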

Auto approvals should be eating into these costs. For most plans, they are not.

Why Auto Approvals Keep Failing Inside Legacy Workflows

The failure mode looks the same everywhere. A health plan bolts an auto approval layer onto a prior authorization workflow that was designed for manual review. Every request enters the same intake funnel. Data arrives incomplete or inconsistently structured. Clinical documentation lands as bulk attachments, hundreds of pages of chart notes that no automation can parse reliably.

Under those conditions, auto approval rules get conservative fast. Exceptions multiply. Edge cases pile up. The system cannot distinguish between a straightforward imaging request that matches policy criteria and a complex surgical case requiring genuine clinical judgment. So it sends both to manual review, because it cannot trust its own inputs.

The result: auto approvals exist on paper but barely dent the queue. Reviewers still touch most cases. And the 40% of physician practices that now employ staff exclusively to handle prior authorization paperwork¹ see no relief.

Three specific blockers keep this pattern locked in place.

Intake is broken. Requests arrive via fax, portal, phone, and EDI, often missing required fields. When the system cannot confirm a request is complete, it cannot auto approve it. We wrote about this problem in detail in our piece on modernizing UM intake. The front end is where most prior authorization delay actually begins.

Policy logic is fragmented. Medical policies live in PDFs. Clinical criteria are configured in the UM platform. Benefit rules sit in the claims system. No single source of truth exists for “should this request be approved?” When three systems disagree, the default answer is always manual review.

Nobody owns the auto approval rate. UM owns clinical appropriateness. IT owns the platform. Compliance owns regulatory exposure. No single executive is accountable for the percentage of requests that bypass manual review, so nobody optimizes for it.

What Decision Ready Prior Auth Actually Looks Like

The health plans getting auto approvals right are not buying better automation. They are fixing the preconditions that make automation possible.

That means restructuring intake so requests arrive complete and policy aligned before any decision logic runs. It means centralizing medical policy so criteria are applied consistently, not interpreted differently by different reviewers on different shifts. And it means surfacing clinical evidence in context: extracting the three data points that matter for a routine imaging request, rather than dumping a 200 page chart into a reviewer’s queue.

This is the approach behind Mizzeto’s Smart Auth. Instead of asking “can this request be auto approved?” Smart Auth asks “is this request decision ready?” That distinction matters. A decision ready request has complete data, matches a known policy pathway, and meets explicit criteria thresholds, so the system approves it with confidence, not with crossed fingers.
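As a rough illustration of the idea (not Smart Auth's actual implementation), a decision ready check might look like the sketch below. The required fields, the `IMG-ROUTINE` pathway, and its threshold are all hypothetical placeholders. A real plan would load them from its own intake schema and centralized medical policy.

```python
from dataclasses import dataclass, field

# Hypothetical intake fields every request must carry.
REQUIRED_FIELDS = ("member_id", "provider_npi", "procedure_code", "diagnosis_code")

# Hypothetical policy table: procedure code -> minimum value per criterion.
POLICY_PATHWAYS = {
    "IMG-ROUTINE": {"weeks_of_conservative_therapy": 6},
}


@dataclass
class AuthRequest:
    fields: dict
    evidence: dict = field(default_factory=dict)


def readiness_gaps(request: AuthRequest) -> list[str]:
    """Return every reason the request is not decision ready (empty list if none)."""
    gaps = [f"missing field: {name}" for name in REQUIRED_FIELDS
            if not request.fields.get(name)]

    pathway = POLICY_PATHWAYS.get(request.fields.get("procedure_code"))
    if pathway is None:
        gaps.append("no known policy pathway")
        return gaps

    for criterion, minimum in pathway.items():
        value = request.evidence.get(criterion)
        if value is None or value < minimum:
            gaps.append(f"criterion not met: {criterion}")
    return gaps


def is_decision_ready(request: AuthRequest) -> bool:
    """Complete data, a known pathway, and every threshold met."""
    return not readiness_gaps(request)
```

Returning the list of gaps, rather than a bare yes or no, means a request that falls out of the automated path arrives at manual review with the reason attached.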

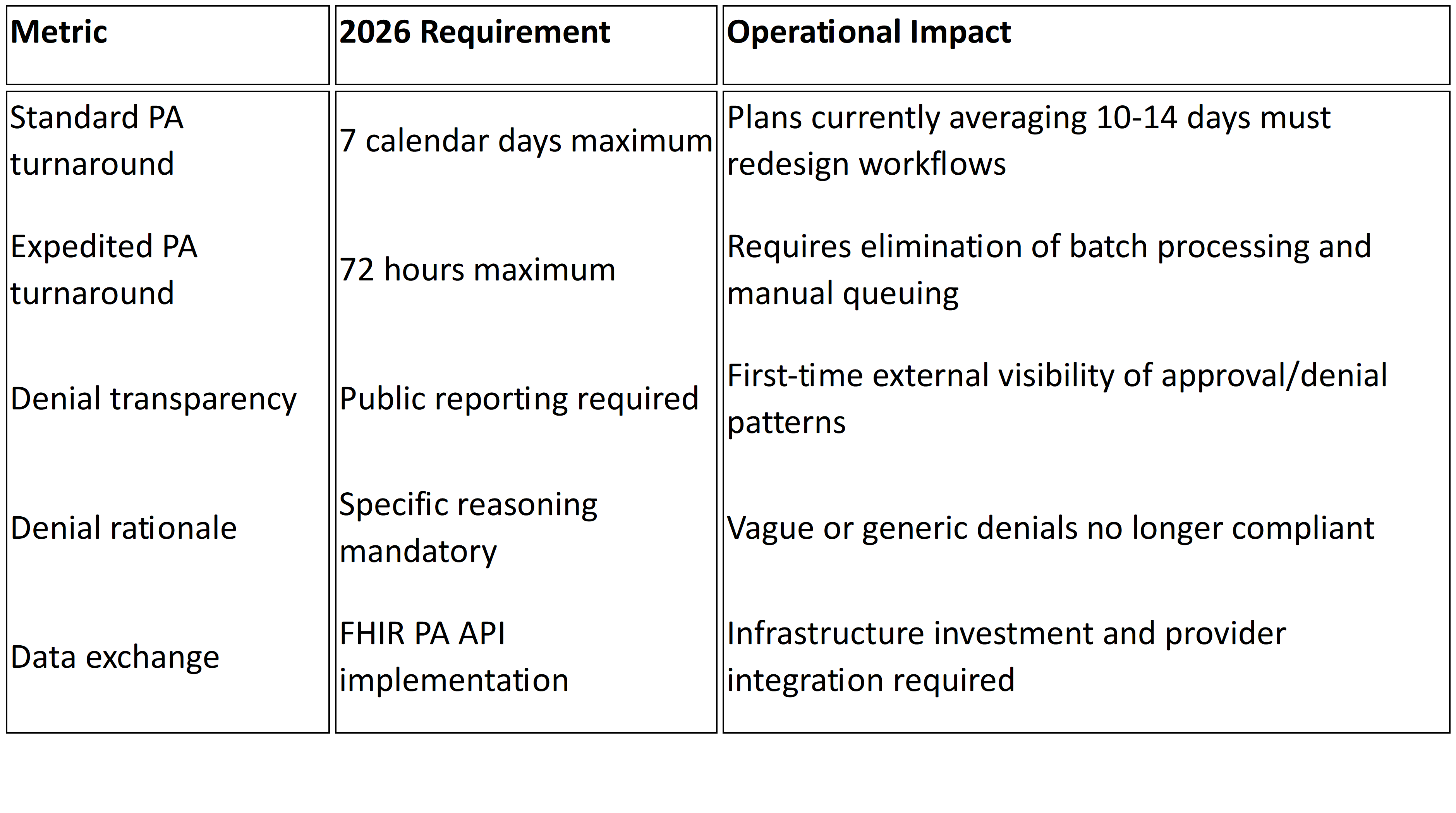

CMS is pushing the entire industry in this direction. Under the Interoperability and Prior Authorization Final Rule (CMS-0057-F), impacted payers must respond to standard prior authorization requests within seven calendar days and expedited requests within 72 hours, effective January 1, 2026.⁵ Plans must also publicly report prior authorization metrics, including approval rates, denial rates, and average turnaround times, beginning March 31, 2026.⁵
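For teams building turnaround tracking, the two windows can be sketched as a simple due-date calculation. This is a simplification under stated assumptions: it starts the clock at the receipt timestamp and treats seven calendar days as exactly 168 hours. The function names are illustrative, and your compliance team should confirm how the rule's clock applies to your lines of business.

```python
from datetime import datetime, timedelta

STANDARD_WINDOW = timedelta(days=7)     # seven calendar days (assumed to be 168 hours)
EXPEDITED_WINDOW = timedelta(hours=72)  # 72 hours


def decision_due(received_at: datetime, expedited: bool) -> datetime:
    """Latest decision time, assuming the clock starts when the request is received."""
    window = EXPEDITED_WINDOW if expedited else STANDARD_WINDOW
    return received_at + window


def is_overdue(received_at: datetime, expedited: bool, now: datetime) -> bool:
    """True once `now` is past the due time for this request."""
    return now > decision_due(received_at, expedited)


if __name__ == "__main__":
    received = datetime(2026, 1, 5, 9, 0)
    print(decision_due(received, expedited=False))  # 2026-01-12 09:00:00
    print(decision_due(received, expedited=True))   # 2026-01-08 09:00:00
```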

Those timelines are not aspirational. They are regulatory. And plans that still route 65% of prior authorization through manual or partially manual channels³ will not meet them by hiring more reviewers. The only realistic path is systematic auto approval of decision ready requests, which means fixing intake, policy logic, and data quality first. We laid out the full compliance timeline in The Countdown to 72/7.

The HL7 Da Vinci FHIR Implementation Guides (CRD, DTR, and PAS) provide the technical scaffolding for this shift, enabling real time coverage requirement discovery and electronic prior authorization submission.⁶ Plans that invest in FHIR based infrastructure now are not just meeting a compliance deadline. They are building the foundation for auto approvals that actually scale.

The Bottom Line

If your auto approval rate is stagnant, the problem is not your approval logic. It is everything upstream: incomplete intake, fragmented policy, and data your system cannot trust.

Start by measuring what percentage of prior authorization requests arrive decision ready: complete, structured, and policy aligned before they hit clinical review. That number is your ceiling for sustainable auto approvals. Everything you do to raise it directly reduces manual review volume, improves turnaround performance, and positions your plan for CMS-0057-F compliance.
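One way to get a first read on that number is sketched below. It assumes each intake record has already been tagged with three yes/no flags. The record shape and field names are hypothetical, and how you decide each flag depends on your own intake and policy systems.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IntakeRecord:
    complete: bool        # all required fields present
    structured: bool      # data arrived in a machine-readable form
    policy_aligned: bool  # request maps to a known policy pathway


def decision_ready_rate(records: list[IntakeRecord]) -> float:
    """Share of requests that were complete, structured, and policy aligned at intake."""
    if not records:
        return 0.0
    ready = sum(1 for r in records if r.complete and r.structured and r.policy_aligned)
    return ready / len(records)


if __name__ == "__main__":
    sample = [
        IntakeRecord(True, True, True),
        IntakeRecord(True, False, True),
        IntakeRecord(False, True, True),
        IntakeRecord(True, True, False),
    ]
    print(f"{decision_ready_rate(sample):.0%}")  # 25%
```

Tracking the three flags separately also shows which upstream fix (intake, structure, or policy mapping) would raise the rate most.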

At Mizzeto, Smart Auth was designed to close exactly this gap. Not by approving more requests automatically, but by ensuring the right requests never need manual review in the first place.

If auto approvals exist in your organization but still feel fragile, that fragility is the signal. Let’s talk about it.

Sources

¹ American Medical Association, “2024 AMA Prior Authorization Physician Survey,” 2024. ama-assn.org

² KFF, “Medicare Advantage Insurers Made Nearly 53 Million Prior Authorization Determinations in 2024,” January 2025. kff.org

³ CAQH, “Priority Topics: Prior Authorization,” 2024. caqh.org

⁴ 4sight Health, “The Costly Lever of Prior Authorization” (citing 2023 CAQH Index data), February 2024. 4sighthealth.com

⁵ Centers for Medicare & Medicaid Services, “CMS Interoperability and Prior Authorization Final Rule (CMS-0057-F),” January 17, 2024. cms.gov

⁶ CAQH CORE, “Navigating the CMS 0057 Final Rule: A Guide for Implementing Prior Authorization Requirements,” 2024. caqh.org