Mizzeto's client is looking for an experienced Prior Authorization Manager/SME with 5 years of experience in Healthcare IT with a focus on prior authorization and utilization management. Knowledge of QNXT and regulatory compliance is a plus | Remote (Manila)

Mizzeto's client is looking for an experienced Prior Authorization Manager/SME with 5 years of experience in Healthcare IT with a focus on prior authorization and utilization management. Knowledge of QNXT and regulatory compliance is a plus | Remote (India)

The rapid acceleration of AI in healthcare has created an unprecedented challenge for payers. Many healthcare organizations are uncertain about how to deploy AI technologies effectively, often fearing unintended ripple effects across their ecosystems. Recognizing this, Mizzeto recently collaborated with a Fortune 25 payer to design comprehensive AI data governance frameworks—helping streamline internal systems and guide third-party vendor selection.

This urgency is backed by industry trends. According to a survey by Define Ventures, over 50% of health plan and health system executives identify AI as an immediate priority, and 73% have already established governance committees.

However, many healthcare organizations struggle to establish clear ownership and accountability for their AI initiatives. When different departments implement AI solutions independently and without coordination, the organization becomes fragmented and leaves itself open to data breaches, compliance risks, and massive regulatory fines.

AI Data Governance in healthcare, at its core, is a structured approach to managing how AI systems interact with sensitive data, ensuring these powerful tools operate within regulatory boundaries while delivering value.

For payers wrestling with multiple AI implementations across claims processing, member services, and provider data management, proper governance provides the guardrails needed to safely deploy AI. Without it, organizations risk not only regulatory exposure but also the potential for PHI data leakage—leading to hefty fines, reputational damage, and a loss of trust that can take years to rebuild.

Healthcare AI Governance can be boiled down to three key principles: Protect People, Prioritize Equity, and Promote Health Value.

For payers, protecting member data isn’t just about ticking compliance boxes—it’s about earning trust, keeping it, and staying ahead of costly breaches. When AI systems handle Protected Health Information (PHI), security needs to be baked into every layer, leaving no room for gaps.

To start, payers can double down on essentials like end-to-end encryption and role-based access controls (RBAC) to keep unauthorized users at bay. But that’s just the foundation. Real-time anomaly detection and automated audit logs are game-changers, flagging suspicious access patterns before they spiral into full-blown breaches. Meanwhile, differential privacy techniques ensure AI models generate valuable insights without ever exposing individual member identities.

Enter risk tiering—a strategy that categorizes data based on its sensitivity and potential fallout if compromised. This laser-focused approach allows payers to channel their security efforts where they’ll have the biggest impact, tightening defenses where it matters most.

On top of that, data minimization strategies work to reduce unnecessary PHI usage, and automated consent management tools put members in the driver’s seat, letting them control how their data is used in AI-powered processes. Without these layers of protection, payers risk not only regulatory crackdowns but also a devastating hit to their reputation—and worse, a loss of member trust they may never recover.

AI should break down barriers to care, not build new ones. Yet, biased datasets can quietly drive inequities in claims processing, prior authorizations, and risk stratification, leaving certain member groups at a disadvantage. To address this, payers must start with diverse, representative datasets and implement bias detection algorithms that monitor outcomes across all demographics. Synthetic data augmentation can fill demographic gaps, while explainable AI (XAI) tools ensure transparency by showing how decisions are made.
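As a rough illustration of what monitoring outcomes across demographics could look like, here is a minimal Python sketch. It compares approval rates between groups and flags a large gap for review. The group labels, the sample decisions, and the 0.8 review threshold are all assumptions made for this example, not values from any regulation or from a production system.

```python
from collections import defaultdict

def approval_rates(decisions):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}

def disparity_ratio(rates):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0

# Hypothetical sample: group labels and outcomes are invented for illustration.
sample = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

FLAG_BELOW = 0.8  # assumed internal review threshold, not a regulatory value

rates = approval_rates(sample)
ratio = disparity_ratio(rates)
needs_review = ratio < FLAG_BELOW
```

A check like this only surfaces a gap. Deciding whether the gap reflects bias or a legitimate clinical difference still belongs with the people overseeing the model.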

But technology alone isn’t enough. AI Ethics Committees should oversee model development to ensure fairness is embedded from day one. Adversarial testing—where diverse teams push AI systems to their limits—can uncover hidden biases before they become systemic issues. By prioritizing equity, payers can transform AI from a potential liability into a force for inclusion, ensuring decisions support all members fairly. This approach doesn’t just reduce compliance risks—it strengthens trust, improves engagement, and reaffirms the commitment to accessible care for everyone.

AI should go beyond automating workflows—it should reshape healthcare by improving outcomes and optimizing costs. To achieve this, payers must integrate real-time clinical data feeds into AI models, ensuring decisions account for current member needs rather than outdated claims data. Furthermore, predictive analytics can identify at-risk members earlier, paving the way for proactive interventions that enhance health and reduce expenses.

Equally important are closed-loop feedback systems, which validate AI recommendations against real-world results, continuously refining accuracy and effectiveness. At the same time, FHIR-based interoperability enables AI to seamlessly access EHR and provider data, offering a more comprehensive view of member health.

To measure the full impact, payers need robust dashboards tracking key metrics such as cost savings, operational efficiency, and member outcomes. When implemented thoughtfully, AI becomes much more than a tool for automation—it transforms into a driver of personalized, smarter, and more transparent care.

An AI Governance Committee is a necessity for payers focused on deploying AI technologies in their organization. As artificial intelligence becomes embedded in critical functions like claims adjudication, prior authorizations, and member engagement, its influence touches nearly every corner of the organization. Without a central body to oversee these efforts, payers risk a patchwork of disconnected AI initiatives, where decisions made in one department can have unintended ripple effects across others. The stakes are high: fragmented implementation doesn’t just open the door to compliance violations—it undermines member trust, operational efficiency, and the very purpose of deploying AI in healthcare.

To be effective, the committee must bring together expertise from across the organization. Compliance officers ensure alignment with HIPAA and other regulations, while IT and data leaders manage technical integration and security. Clinical and operational stakeholders ensure AI supports better member outcomes, and legal advisors address regulatory risks and vendor agreements. This collective expertise serves as a compass, helping payers harness AI’s transformative potential while protecting their broader healthcare ecosystem.

At Mizzeto, we’ve partnered with a Fortune 25 payer to design and implement advanced AI Data Governance frameworks, addressing both internal systems and third-party vendor selection. Throughout this journey, we’ve found that the key to unlocking the full potential of AI lies in three core principles: Protect People, Prioritize Equity, and Promote Health Value. These principles aren’t just aspirational—they’re the bedrock for creating impactful AI solutions while maintaining the trust of your members.

If your organization is looking to harness the power of AI while ensuring safety, compliance, and meaningful results, let’s connect. At Mizzeto, we’re committed to helping payers navigate the complexities of AI with smarter, safer, and more transformative strategies. Reach out today to see how we can support your journey.

Feb 21, 2024 • 2 min read

Your UM director just told you the team averaged 8.5 days on standard prior auths last quarter. You nodded, made a note, moved on. In six months, that number becomes a regulatory violation.

For years, health plans have complained about prior authorization burdens: opaque decisioning, variable outcomes, slow turnaround, escalating provider frustration. Half-hearted automation efforts and hybrid analog-digital processes made the problem more visible without solving it.

CMS is now codifying expectations in a way that forces every payer to face reality: the way prior authorization has been done cannot survive 2026.

The changes coming from the CMS Interoperability and Prior Authorization Final Rule aren't incremental technical requirements. They're operational inflection points that will expose long-standing design flaws in prior authorization and utilization management. Leaders who wait until enforcement deadlines will find themselves reacting. Those who act now can redesign the system itself.

Most plans are asking: "What do we have to do to comply with CMS by 2026?" That's a tactical question.

The strategic question is: How do we redesign our prior authorization engine so it performs at the speed, transparency, and explainability levels CMS expects without burning clinical resources, inflating costs, or fragmenting operations?

Checking boxes gets you compliant. Redesigning the system gets you competitive.

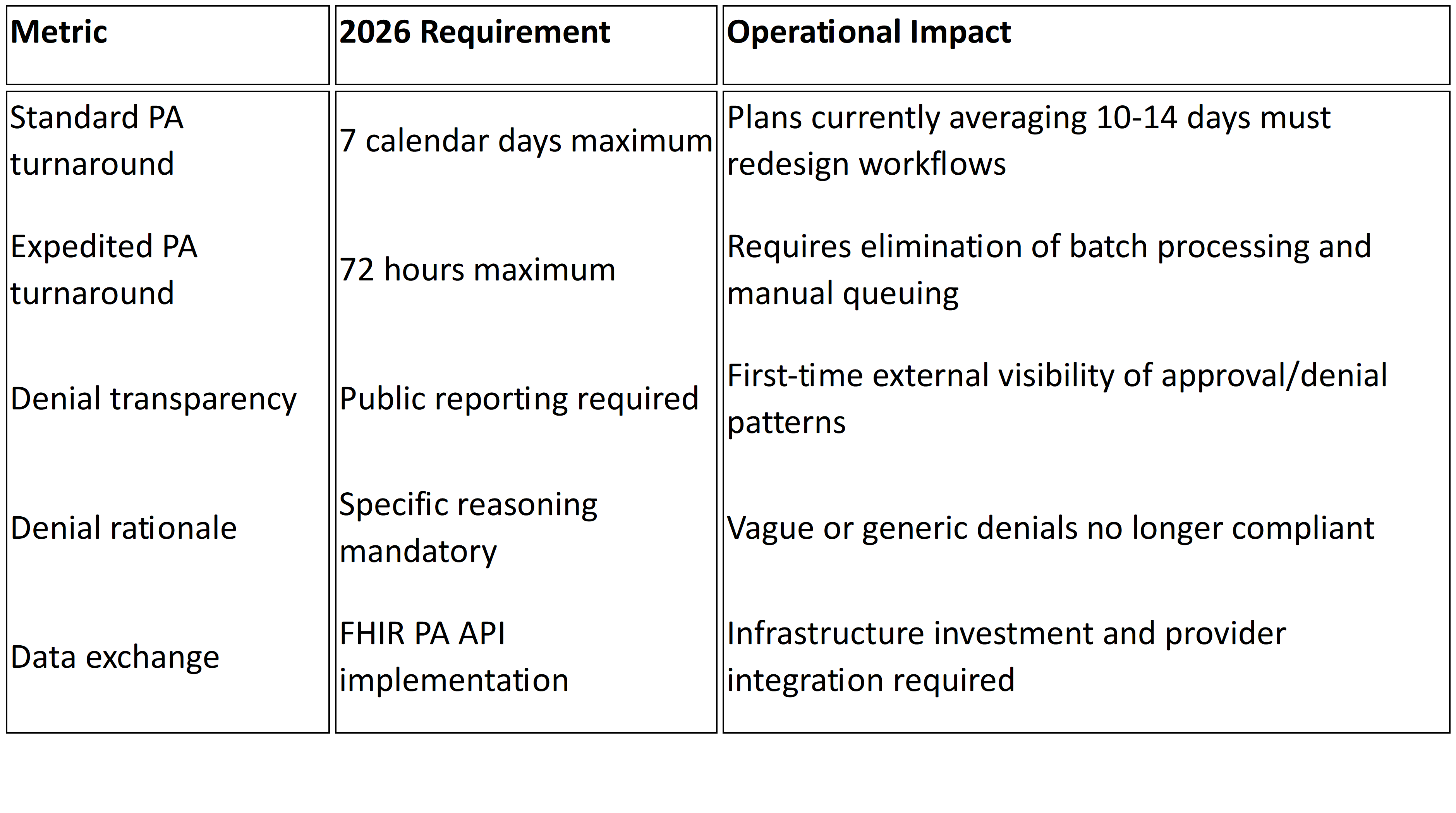

Beginning January 1, 2026, CMS moves prior authorization from operational best practice to regulatory mandate.

Under the Interoperability and Prior Authorization Final Rule (CMS-0057-F), impacted payers, including Medicare Advantage, Medicaid managed care organizations, CHIP, and certain Qualified Health Plans, must comply with several non-negotiable requirements:¹

72-hour turnaround for expedited prior authorization requests

Seven calendar days for standard requests

Specific, actionable denial reasons included with every adverse determination

Public reporting of prior authorization metrics including approval rates, denial rates, and average processing times beginning March 31, 2026²

FHIR-based APIs to support electronic prior authorization workflows and expanded data access
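The two turnaround windows in the list above can be expressed as a simple deadline calculation. The sketch below is a minimal Python illustration that assumes the clock starts when the request is received. The function names and sample timestamps are hypothetical, and the sketch does not model extensions or any other exceptions.

```python
from datetime import datetime, timedelta

# Windows taken from the requirements listed above.
EXPEDITED_WINDOW = timedelta(hours=72)
STANDARD_WINDOW = timedelta(days=7)  # calendar days, not business days

def decision_deadline(received_at, expedited):
    """Latest decision time, assuming the clock starts at receipt."""
    window = EXPEDITED_WINDOW if expedited else STANDARD_WINDOW
    return received_at + window

def is_overdue(received_at, expedited, now):
    """True if the request has passed its deadline without a decision."""
    return now > decision_deadline(received_at, expedited)

# Hypothetical request received on a Monday morning.
received = datetime(2026, 1, 5, 9, 0)
expedited_due = decision_deadline(received, expedited=True)
standard_due = decision_deadline(received, expedited=False)

# A standard request still open 8.5 days after receipt is past its window.
late_check = is_overdue(received, False, received + timedelta(days=8.5))
```

Because the standard window counts calendar days, weekends and holidays consume it just like working days.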

Here's what this means in practice:

These aren't tweaks to existing workflows. They introduce enforceable timelines, public transparency, and standardized data exchange that most legacy UM environments were never built to support.

The 2026 requirements don't create new operational weaknesses. They expose existing ones.

You Can't Hire Your Way to 72-Hour Compliance

If your prior authorization process depends on manual triage, inconsistent intake validation, or batch review cycles, meeting 72-hour and seven-day mandates becomes structurally challenging. Missed SLAs are no longer internal performance issues. They become regulatory violations.

The constraint is workflow design, not headcount. Adding clinical reviewers may temporarily reduce queue depth, but it doesn't eliminate intake latency, fragmented decision logic, or rework loops that consume days before cases reach clinical evaluation.

Your Denial Rates Are About to Become Public

For the first time, denial rates and processing times will be publicly reported beginning March 31, 2026.²

Plans with high denial rates, particularly those with elevated appeal overturn percentages, will face scrutiny from regulators, providers, and beneficiaries. Appeal overturn rates that were previously internal quality metrics become public signals about determination consistency.

Denials frequently reversed on appeal start looking less like utilization management discipline and more like systematic dysfunction.

Unstructured Intake Creates SLA Risk

Any workflow relying on fax, email attachments, or unstructured documentation creates intake uncertainty. Under 2026 mandates, that uncertainty translates directly into SLA exposure. What was operational inconvenience becomes regulatory vulnerability.

When requests arrive incomplete or in unstructured formats, the clock has already started but clinical review cannot. Days get consumed in follow-up and clarification before actual determination work begins.

Policy Fragmentation Becomes Audit Risk

Medical policies in PDFs. Coverage criteria configured separately in UM systems. Benefit rules embedded in claims engines.

When these layers diverge, denial rationale becomes inconsistent. Inconsistent rationale fuels appeals. Appeal patterns become public metrics tracked by CMS and visible to your provider network.

The 2026 rule requires "a specific reason for a denial"¹ in a manner that allows providers to understand what additional information or clinical criteria would result in approval. Fragmented policy governance makes this level of specificity difficult to maintain consistently across thousands of determinations.

API Implementation Without Operational Alignment Fails

FHIR-based Prior Authorization APIs are mandated under the final rule,¹ but successful implementation requires more than technical connectivity.

These APIs demand structured, standardized data; clear mapping of coverage rules; real-time status tracking; and determination traceability. Treating API implementation as a technical bolt-on without aligning internal policy logic and workflow orchestration creates compliance on paper but operational brittleness in practice.

Reporting Infrastructure Will Strain Multiple Teams

Public reporting requires consolidated, accurate, reconcilable data. The rule requires payers to publicly report metrics including prior authorization decisions, denial reasons, and turnaround times.²

Most plans currently track these metrics across multiple systems: intake portals, UM platforms, claims engines. Without centralized reporting architecture, compliance becomes a manual reconciliation exercise rather than an automated output.
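To make "automated output" concrete, here is a minimal Python sketch of how approval rate, denial rate, and average processing time could be derived once determinations sit in one consolidated record set. The `Determination` record and its fields are hypothetical, and the metrics are deliberately simplified. The reporting categories CMS actually requires are more granular than this.

```python
from dataclasses import dataclass

@dataclass
class Determination:
    """Hypothetical consolidated record for one prior authorization decision."""
    approved: bool
    days_to_decision: float

def summarize(determinations):
    """Compute simplified reporting metrics from consolidated records."""
    count = len(determinations)
    if count == 0:
        return {"approval_rate": None, "denial_rate": None, "avg_days": None}
    approved = sum(1 for d in determinations if d.approved)
    total_days = sum(d.days_to_decision for d in determinations)
    return {
        "approval_rate": approved / count,
        "denial_rate": (count - approved) / count,
        "avg_days": total_days / count,
    }

# Invented sample data for illustration only.
records = [
    Determination(True, 2.0),
    Determination(True, 4.0),
    Determination(False, 6.0),
    Determination(True, 8.0),
]
summary = summarize(records)
```

The calculation is trivial. The hard part is getting intake portals, UM platforms, and claims engines to feed a single record set that reconciles.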

What Forward-Thinking Plans Are Doing Differently

The plans that will meet and leverage the 2026 expectations approach the problem differently.

They Treat Prior Authorization as a System, Not a Function

Rather than thinking in terms of "PA teams" or "PA tech stacks," they define a unified decision pipeline: intake → policy → decision → evidence → reporting. Every component must be architected for speed, traceability, and defensibility.

They Engineer Intake for Decision Readiness

Systems that treat intake as a validation and structuring event, not just data capture, dramatically reduce downstream review time. When requests arrive complete and structured, decisions get smarter and faster.

If a significant portion of requests require follow-up for missing clinical documentation, days burn before clinical review even starts. Fixing intake fixes throughput.

They Govern Policy and Logic Centrally

If policy resides in PDFs, disparate tools, and tribal knowledge, automation fails. Aligning policy logic, configuration, and deployment is the prerequisite for defensible, explainable decisions that meet CMS transparency expectations.

Centralized policy governance ensures reviewers apply consistent standards across all determinations, directly impacting appeal rates and public reporting metrics.

They Accelerate FHIR API Adoption Strategically

Forward-leaning plans are adopting FHIR Prior Authorization APIs now, enabling electronic request and response, reducing provider friction, and establishing a foundation for real-time decisioning rather than batch processing.

This isn't just compliance theater. It's infrastructure for the next decade of utilization management.

Most organizations' instinctive reaction to tighter SLAs is staffing expansion. Consider what that investment looks like:

Clinical hiring: Expanding nurse reviewer teams to handle faster turnaround requirements

Reporting resources: Staff to reconcile metrics across systems for public reporting compliance

API implementation: Technical infrastructure for FHIR PA API deployment and provider integration

Policy governance: Often unfunded, leading to continued fragmentation and appeal exposure

The alternative is investing in redesigning the decision pipeline itself. Structured intake, centralized policy logic, and automated workflow orchestration reduce review burden while improving consistency. The ROI isn't just compliance. It's operational leverage.

If you're treating this as a compliance checklist, you're already behind. This is a fundamental redesign of how utilization management operates.

By Q2 2025: Audit your current PA workflow end-to-end. Identify where time gets consumed: intake validation, clinical review queues, policy lookup, documentation rework, peer-to-peer scheduling. Measure your actual turnaround distribution, not averages.

By Q3 2025: Centralize policy governance. Map coverage criteria to decision logic. Ensure clinical reviewers are applying consistent standards that can withstand public scrutiny and audit review.

By Q4 2025: Implement structured intake that validates completeness before requests enter clinical queues. Stand up reporting infrastructure that consolidates metrics in real time.

By Q1 2026: Conduct dry runs of public reporting. Simulate 72-hour expedited workflows under peak volume. Validate FHIR API functionality with key provider groups.
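The audit step above asks for the turnaround distribution rather than the average, and a small example shows why. The minimal Python sketch below uses invented turnaround values. The helper names are hypothetical, and the percentile uses the simple nearest-rank method.

```python
from statistics import mean

STANDARD_LIMIT_DAYS = 7  # seven calendar days for standard requests

def share_within(days, limit):
    """Fraction of requests decided within the limit."""
    return sum(1 for d in days if d <= limit) / len(days)

def nearest_rank_percentile(days, pct):
    """Nearest-rank percentile; pct is an integer from 1 to 100."""
    ordered = sorted(days)
    rank = max(1, (pct * len(ordered) + 99) // 100)  # ceiling division
    return ordered[rank - 1]

# Invented sample: days to decision for ten standard requests.
turnaround_days = [2, 3, 3, 4, 5, 5, 6, 6, 12, 14]

average = mean(turnaround_days)
on_time = share_within(turnaround_days, STANDARD_LIMIT_DAYS)
p90 = nearest_rank_percentile(turnaround_days, 90)
```

In this sample the average is 6 days, which looks comfortable against a seven-day limit. Yet only 80% of requests finish within seven days, and the 90th percentile is 12 days. The tail is where violations occur, so the tail is what needs measuring.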

The plans that redesign now won't just comply. They'll operate with structural advantage.

We built Smart Auth after years of working inside health plan operations, seeing firsthand where prior authorization workflows break down. It's designed to make prior authorization decision-ready from intake through policy application and final determination.

Smart Auth structures data at intake, aligns policy logic centrally, and supports the traceability required for timely decisions and transparent reporting. It enables defensible, explainable determinations at the speed CMS expects without requiring massive clinical hiring or fragmented point solutions.

In 2026, prior authorization performance won't be judged internally. It will be measured, reported, and compared publicly. The question isn't whether to redesign. It's whether you start now or spend 2026 firefighting compliance gaps while your metrics become part of the public record.

Jan 30, 2024 • 6 min read

Physicians and their staff complete an average of 39 prior authorization requests per week. They spend roughly 13 hours processing them.¹ When requests get denied, more than 80% of those denials are partially or fully overturned on appeal, meaning the care was appropriate all along.²

That is not a utilization management program working as intended. That is a system generating unnecessary friction, burning clinical resources, and producing decisions it cannot defend.

Auto approvals were supposed to fix this. Route the obvious cases through automatically. Free up clinical reviewers for complex decisions. Cut turnaround times. Reduce provider abrasion.

Most health plans tried some version of this approach. Few got the results they expected.

The math behind auto approvals is straightforward. If a significant share of prior authorization requests are routine, policy aligned, and destined for approval anyway, why route them through manual clinical review?

The problem is execution. In a recent KFF analysis of Medicare Advantage data, denial rates ranged from 4.2% at Elevance Health to 12.8% at UnitedHealth Group.² Those rates might seem low. But when over 80% of denied requests are overturned on appeal, the real story becomes clear: plans are denying care they will ultimately approve, just with extra steps, extra cost, and extra delay.

According to the CAQH Index, only 35% of medical prior authorizations are conducted fully electronically.³ Manual prior authorization transactions cost providers $10.97 each. Fully electronic ones cost $5.79, roughly half. For payers, the gap is even wider: $3.52 per manual transaction versus five cents for a fully electronic one.⁴
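The per-transaction gap is easy to work out from the figures above. This short Python sketch does the arithmetic. The 100,000-transaction volume is an assumed number chosen only to show how the gap scales.

```python
# Per-transaction costs in dollars, as cited above.
PROVIDER_MANUAL = 10.97
PROVIDER_ELECTRONIC = 5.79
PAYER_MANUAL = 3.52
PAYER_ELECTRONIC = 0.05

def savings_per_transaction(manual, electronic):
    """Dollar difference between a manual and a fully electronic transaction."""
    return round(manual - electronic, 2)

def projected_savings(volume, manual, electronic):
    """Total difference if `volume` manual transactions became electronic."""
    return round(volume * (manual - electronic), 2)

provider_saving = savings_per_transaction(PROVIDER_MANUAL, PROVIDER_ELECTRONIC)
payer_saving = savings_per_transaction(PAYER_MANUAL, PAYER_ELECTRONIC)

# Assumed volume for illustration only.
ASSUMED_VOLUME = 100_000
payer_total = projected_savings(ASSUMED_VOLUME, PAYER_MANUAL, PAYER_ELECTRONIC)
```

That works out to $5.18 saved per transaction for providers and $3.47 for payers, or roughly $347,000 for a payer at the assumed volume.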

Auto approvals should be eating into these costs. For most plans, they are not.

The failure mode looks the same everywhere. A health plan bolts an auto approval layer onto a prior authorization workflow that was designed for manual review. Every request enters the same intake funnel. Data arrives incomplete or inconsistently structured. Clinical documentation lands as bulk attachments, hundreds of pages of chart notes that no automation can parse reliably.

Under those conditions, auto approval rules get conservative fast. Exceptions multiply. Edge cases pile up. The system cannot distinguish between a straightforward imaging request that matches policy criteria and a complex surgical case requiring genuine clinical judgment. So it sends both to manual review, because it cannot trust its own inputs.

The result: auto approvals exist on paper but barely dent the queue. Reviewers still touch most cases. And the 40% of physician practices that now employ staff exclusively to handle prior authorization paperwork¹ see no relief.

Three specific blockers keep this pattern locked in place.

Intake is broken. Requests arrive via fax, portal, phone, and EDI, often missing required fields. When the system cannot confirm a request is complete, it cannot auto approve it. We wrote about this problem in detail in our piece on modernizing UM intake. The front end is where most prior authorization delay actually begins.

Policy logic is fragmented. Medical policies live in PDFs. Clinical criteria are configured in the UM platform. Benefit rules sit in the claims system. No single source of truth exists for “should this request be approved?” When three systems disagree, the default answer is always manual review.

Nobody owns the auto approval rate. UM owns clinical appropriateness. IT owns the platform. Compliance owns regulatory exposure. No single executive is accountable for the percentage of requests that bypass manual review, so nobody optimizes for it.

The health plans getting auto approvals right are not buying better automation. They are fixing the preconditions that make automation possible.

That means restructuring intake so requests arrive complete and policy aligned before any decision logic runs. It means centralizing medical policy so criteria are applied consistently, not interpreted differently by different reviewers on different shifts. And it means surfacing clinical evidence in context: extracting the three data points that matter for a routine imaging request, rather than dumping a 200 page chart into a reviewer’s queue.

This is the approach behind Mizzeto’s Smart Auth. Instead of asking “can this request be auto approved?” Smart Auth asks “is this request decision ready?” That distinction matters. A decision ready request has complete data, matches a known policy pathway, and meets explicit criteria thresholds, so the system approves it with confidence, not with crossed fingers.
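The three conditions for a decision ready request can be sketched as a single check. The Python below is a generic illustration of that idea and not how Smart Auth is implemented. The required fields, the `IMG-001` procedure code, and the threshold are hypothetical placeholders, not real policy criteria.

```python
from dataclasses import dataclass, field

# Assumed required intake fields; a real plan would define its own.
REQUIRED_FIELDS = ("member_id", "procedure_code", "diagnosis_code")

# Hypothetical policy pathways: procedure code -> minimum evidence values.
KNOWN_PATHWAYS = {"IMG-001": {"weeks_conservative_therapy": 6}}

@dataclass
class Request:
    fields: dict
    evidence: dict = field(default_factory=dict)

def is_decision_ready(req):
    """Apply the three conditions: complete, known pathway, thresholds met."""
    # 1. Complete data: every required field is present and non-empty.
    if any(not req.fields.get(name) for name in REQUIRED_FIELDS):
        return False
    # 2. Known policy pathway for the requested procedure.
    criteria = KNOWN_PATHWAYS.get(req.fields["procedure_code"])
    if criteria is None:
        return False
    # 3. Explicit criteria thresholds are met by the structured evidence.
    return all(req.evidence.get(key, 0) >= minimum
               for key, minimum in criteria.items())

complete = {"member_id": "M1", "procedure_code": "IMG-001", "diagnosis_code": "D1"}
ready = is_decision_ready(Request(complete, {"weeks_conservative_therapy": 8}))
not_ready = is_decision_ready(Request(complete, {"weeks_conservative_therapy": 2}))
```

A request that fails any of the three checks goes to a reviewer. Failing the check is a routing decision and never a denial.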

CMS is pushing the entire industry in this direction. Under the Interoperability and Prior Authorization Final Rule (CMS-0057-F), impacted payers must respond to standard prior authorization requests within seven calendar days and expedited requests within 72 hours, effective January 1, 2026.⁵ Plans must also publicly report prior authorization metrics, including approval rates, denial rates, and average turnaround times, beginning March 31, 2026.⁵

Those timelines are not aspirational. They are regulatory. And plans that still route 65% of prior authorization through manual or partially manual channels³ will not meet them by hiring more reviewers. The only realistic path is systematic auto approval of decision ready requests, which means fixing intake, policy logic, and data quality first. We laid out the full compliance timeline in The Countdown to 72/7.

The HL7 Da Vinci FHIR Implementation Guides (CRD, DTR, and PAS) provide the technical scaffolding for this shift, enabling real time coverage requirement discovery and electronic prior authorization submission.⁶ Plans that invest in FHIR based infrastructure now are not just meeting a compliance deadline. They are building the foundation for auto approvals that actually scale.

If your auto approval rate is stagnant, the problem is not your approval logic. It is everything upstream: incomplete intake, fragmented policy, and data your system cannot trust.

Start by measuring what percentage of prior authorization requests arrive decision ready: complete, structured, and policy aligned before they hit clinical review. That number is your ceiling for sustainable auto approvals. Everything you do to raise it directly reduces manual review volume, improves turnaround performance, and positions your plan for CMS-0057-F compliance.

At Mizzeto, Smart Auth was designed to close exactly this gap. Not by approving more requests automatically, but by ensuring the right requests never need manual review in the first place.

If auto approvals exist in your organization but still feel fragile, that fragility is the signal. Let’s talk about it.

¹ American Medical Association, “2024 AMA Prior Authorization Physician Survey,” 2024. ama-assn.org

² KFF, “Medicare Advantage Insurers Made Nearly 53 Million Prior Authorization Determinations in 2024,” January 2025. kff.org

³ CAQH, “Priority Topics: Prior Authorization,” 2024. caqh.org

⁴ 4sight Health, “The Costly Lever of Prior Authorization” (citing 2023 CAQH Index data), February 2024. 4sighthealth.com

⁵ Centers for Medicare & Medicaid Services, “CMS Interoperability and Prior Authorization Final Rule (CMS-0057-F),” January 17, 2024. cms.gov

⁶ CAQH CORE, “Navigating the CMS 0057 Final Rule: A Guide for Implementing Prior Authorization Requirements,” 2024. caqh.org

Jan 30, 2024 • 6 min read

For years, prior authorization improvement efforts have centered on one metric: speed. Faster turnaround times. Shorter queues. Quicker determinations. When backlogs grow, the instinctive response is to push harder, add staff, tighten SLAs, accelerate intake, automate submission.

And yet, despite sustained investment, many health plans find themselves in a familiar place. Requests move faster into the system, but decisions do not come out any cleaner. Appeals rise. Clinical teams feel busier, not better supported. Regulatory scrutiny intensifies.

The problem isn’t that health plans aren’t moving quickly enough. It’s that they’re optimizing for the wrong outcome.

The critical question facing payer executives today is not how to make prior authorization faster. It is how to make authorization outcomes decision-ready.

In theory, prior authorization is a linear process. A request arrives. Medical necessity is assessed. A decision is rendered and communicated. In practice, speed at the front of the process often exposes fragility downstream. Requests arrive sooner, but incomplete. Data flows faster, but inconsistently. Clinical documentation is attached, but not usable.

What feels like progress—shorter intake cycles, higher submission volumes—often masks a deeper inefficiency: decisions still require the same amount of searching, clarifying, and rework. Sometimes more.

When speed becomes the primary goal, organizations optimize how fast work enters the system, not how effectively it can be resolved.

In our experience working with payer organizations, most delays in prior authorization do not occur because reviewers are slow. They occur because reviewers are forced to reconstruct meaning from poorly prepared inputs.

Requests arrive with missing or mis-keyed information. Clinical notes are uploaded as hundreds of unstructured pages. Policy criteria are technically met, but not clearly demonstrated. Nurses and physicians spend their time hunting for evidence rather than applying judgment.

A routine imaging authorization, for example, may arrive with a 200-page chart attached—office notes, lab results, historical encounters spanning years. The information needed to approve the request may exist somewhere in the record, but reviewers must sift through dozens of irrelevant pages to find it. The delay isn’t clinical complexity. It’s the effort required to locate and validate the right signal inside too much noise. That friction compounds downstream, creating a clinical review bottleneck where highly trained staff spend their time searching for context instead of making decisions.

Accelerating intake without addressing these issues simply increases the volume of work that is not ready to be decided. Each incomplete request introduces pauses, clarifications, and handoffs. What should have been a single pass through the system becomes multiple touches across multiple teams.

From the outside, this looks like insufficient capacity. From the inside, it is capacity being quietly consumed by avoidable friction. Across the U.S. health care system, administrative burden tied to prior authorization contributes to multi-billion-dollar annual costs, reflecting how inefficient processes absorb payer and provider resources long before clinical review begins.¹

This is where many modernization efforts stall. Automation accelerates submission and routing, but PA automation alone does not change the quality of what enters the system. Providers submit more requests because it is easier to do so. Intake teams process them faster. Clinical reviewers inherit the same defects at higher velocity. Speed amplifies whatever already exists, and when work is not decision-ready, it multiplies rework rather than reducing it.

Organizations that consistently control prior authorization performance focus less on turnaround time and more on decision quality at entry.

They ensure requests arrive complete and structured, reducing manual re-keying and downstream correction. Reflecting this shift, a significant proportion of health plans have already implemented electronic prior authorization systems, signalling both the complexity of modern workflows and the growing emphasis on reducing manual friction.² They normalize data so policy criteria can be evaluated consistently. They surface the specific clinical evidence needed for a decision, rather than forcing reviewers to search entire records. And they treat policy logic as a shared, governed asset, not something interpreted differently by each reviewer.

As a result, their systems move work through once. Appeals decrease because rationales are timely and clear. Clinical teams spend their time applying judgment instead of assembling context. Speed improves, but as a consequence of better design, not as the primary objective.

The shift is subtle but decisive. The goal is no longer faster authorization. It is fewer touches per authorization.

Prior authorization sits at the intersection of cost control, access, and regulatory oversight. As CMS and other regulators increasingly expect decisions to be explainable, not just defensible, the cost of prioritizing speed over clarity rises. Under the CMS Interoperability and Prior Authorization final rule (CMS-0057-F), impacted payers must provide prior authorization decisions within 72 hours for urgent requests and seven calendar days for standard requests, and include specific reasons for denials to improve transparency and explainability of decisions.³ The rule shifts expectations away from throughput alone and toward consistency, traceability, and timely rationale.

Systems that rely on heroics and overtime may hit SLAs in the short term, but they accumulate risk. Systems designed for decision readiness scale more predictably and withstand scrutiny more effectively.

What executives experience as utilization management pressure is rarely a failure of effort. It is a signal that the system has been optimized for motion, not resolution.

At Mizzeto, we work with payer organizations to address this exact gap—connecting intake, clinical review, and policy logic so prior authorization decisions can be made efficiently, consistently, and explainably. This is the design philosophy behind Smart Auth, our prior authorization platform—ensuring requests arrive decision-ready, with structured intake, reduced rework, and clinical evidence surfaced in context rather than buried in charts.

Because in modern utilization management, sustained performance isn’t about pushing teams harder. It’s about removing the friction that never needed to be there in the first place.

If your team is hitting SLAs but appeals keep climbing, let’s talk.

Jan 30, 2024 • 6 min read

Prior authorization backlogs are often described as volume problems. They show up as growing queues on operational dashboards, rising turnaround times, and escalating pressure on clinical teams. The explanation, almost reflexively, is that demand arrived faster than expected: too many requests, too little time.

But for most health plans, that explanation doesn’t hold up under scrutiny. Prior authorization backlogs are rarely caused by volume alone. They are caused by friction inside the authorization process itself. Friction that is well known, consistently repeated, and largely predictable.1

The real question isn’t why prior authorization volume increased. It’s why so many authorization requests cannot move cleanly from intake to decision. In theory, prior auth is straightforward: receive a request, assess medical necessity, render a decision, notify the provider. In practice, the work looks very different.

Requests arrive incomplete. Key fields are missing or entered incorrectly. Clinical documentation is attached as hundreds of unstructured pages. Nurses and physicians spend their time searching for the few sentences that actually matter. Decisions stall not because they are clinically complex, but because the information required to make them is fragmented, inconsistent, or buried.

Backlogs form not at the moment of clinical judgment, but long before that judgment can even begin.

Most prior authorization backlogs are built upstream, during intake. Provider offices submit requests with missing clinical details, outdated codes, or attachments that don’t align to policy requirements.2 Internal coordinators re-key information from faxes, portals, or PDFs, introducing small errors that force rework later. Many prior authorization delays stem from manual processes and technology gaps, leading to inefficiency and error-prone workflows.3 Each defect is minor on its own, but together they create a steady drag on throughput.

Downstream, clinical reviewers inherit this friction. Nurses sift through large medical records to reconstruct timelines.4 Physicians pause decisions while clarifications are requested. Requests bounce between teams. Appeals increase, not always because the decision was wrong, but because the rationale was delayed or unclear. The backlog grows quietly, one stalled case at a time.

From a distance, all of this looks like a surge. Executives see more cases aging past SLA. Leaders see staff working harder without visible progress. The conclusion is that volume must be overwhelming capacity. In reality, capacity is being consumed by rework.

Every incomplete intake, every mis-keyed field, every unclear policy reference turns a single request into multiple touches. What should have been a linear process becomes a loop. The backlog isn’t driven by how many requests arrived; it’s driven by how many times each request must be handled before it can be resolved. That multiplier effect is predictable. And yet, it’s rarely modeled.
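One simple way to model that multiplier is sketched below in Python. It is illustrative only: it assumes each handling of a request independently fails to resolve it with a fixed probability (a hypothetical `rework_rate`), which makes the expected number of touches a geometric series, 1 / (1 - rework_rate). The function names and the example figures are assumptions, not measured data.

```python
def expected_touches(rework_rate: float) -> float:
    """Expected touches per request when each touch independently
    sends the request back for rework with probability `rework_rate`."""
    if not 0.0 <= rework_rate < 1.0:
        raise ValueError("rework_rate must be in [0, 1)")
    return 1.0 / (1.0 - rework_rate)


def effective_workload(requests: int, rework_rate: float) -> float:
    """Total touches needed to resolve `requests` incoming requests."""
    return requests * expected_touches(rework_rate)


if __name__ == "__main__":
    # Hypothetical volumes and rates, for illustration only.
    for rate in (0.0, 0.25, 0.5):
        print(f"rework rate {rate:.2f}: "
              f"{effective_workload(1000, rate):.0f} touches for 1000 requests")
```

Under this assumption, the same 1,000 requests cost twice the handling effort at a 50% rework rate as they do at zero, with no change in incoming volume.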

Automation is often applied at the intake layer, with the promise of speed. And it does make submission faster. Providers submit more requests. Intake teams process them more quickly. But if the underlying issues remain (missing information, poor data normalization, unstructured records), automation simply accelerates the arrival of flawed work.

Clinical teams feel this immediately. More cases arrive faster, but with the same defects. Reviewers spend less time waiting and more time searching, clarifying, and escalating.5

This is why many health plans modernize prior auth technology and still experience worsening backlogs. Automation has increased flow, but not decision readiness.

Plans that control prior authorization backlogs focus less on speed and more on decision quality at intake.

They invest in ensuring requests arrive complete, structured, and aligned to policy requirements. They reduce manual keying wherever possible. They use technology to surface the right clinical evidence, rather than flooding reviewers with entire charts. And they treat policy interpretation as something that must scale consistently across reviewers, not as tribal knowledge.
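As a rough illustration of what "arriving complete" can mean in practice, the Python sketch below flags required fields that are absent or blank before a request reaches a reviewer. The field names in `REQUIRED_FIELDS` and the helper names are hypothetical; a real plan would derive its required fields from its own policy and intake specifications.

```python
# Hypothetical required fields, chosen for illustration only.
REQUIRED_FIELDS = (
    "member_id",
    "provider_npi",
    "procedure_code",
    "diagnosis_code",
    "clinical_notes",
)


def missing_fields(request: dict) -> list:
    """Return the required fields that are absent or blank, in order."""
    missing = []
    for field in REQUIRED_FIELDS:
        value = request.get(field)
        if value is None or (isinstance(value, str) and not value.strip()):
            missing.append(field)
    return missing


def is_decision_ready(request: dict) -> bool:
    """A request is treated as decision-ready only if nothing is missing."""
    return not missing_fields(request)


if __name__ == "__main__":
    request = {
        "member_id": "M-001",
        "provider_npi": "1234567890",
        "procedure_code": " ",  # blank value counts as missing
        "diagnosis_code": "E11.9",
    }
    print(missing_fields(request))
```

Catching these gaps at submission means the provider fixes them once, instead of a reviewer discovering them after the case has already aged in the queue.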

Most importantly, they measure where requests stall and why. Backlogs are treated as signals: indicators of where information breaks down, where policy is unclear, or where rework is being introduced.
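Measuring where requests stall does not require heavy tooling. The Python sketch below tallies stall events by stage and reason so the most common sources of rework surface first. The `StallEvent` shape, the stage and reason labels, and the sample events are all hypothetical, standing in for whatever a plan's workflow system actually records.

```python
from collections import Counter
from typing import Iterable, NamedTuple


class StallEvent(NamedTuple):
    request_id: str
    stage: str   # e.g. "intake" or "clinical_review" (illustrative labels)
    reason: str  # e.g. "missing_clinical_notes" (illustrative labels)


def stall_hotspots(events: Iterable[StallEvent], top: int = 3):
    """Return the `top` most frequent (stage, reason) pairs with counts."""
    counts = Counter((event.stage, event.reason) for event in events)
    return counts.most_common(top)


if __name__ == "__main__":
    # Made-up events for illustration only.
    sample = [
        StallEvent("A1", "intake", "missing_clinical_notes"),
        StallEvent("A2", "intake", "missing_clinical_notes"),
        StallEvent("A3", "clinical_review", "unclear_policy_reference"),
        StallEvent("A4", "intake", "missing_clinical_notes"),
    ]
    for (stage, reason), count in stall_hotspots(sample):
        print(stage, reason, count)
```

Grouping by stage and reason together, rather than by stage alone, points to the specific defect to fix instead of just the team that inherited it.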

As a result, their queues are smaller, not because demand disappeared, but because requests move through the system once, instead of three or four times.

When prior authorization backlogs are framed as staffing or volume problems, they persist. When they are understood as information and workflow problems, they become solvable.

Prior auth backlogs don’t originate in clinical decision-making. They originate in how information enters the system and how much effort it takes to make that information usable.

What executives experience as UM backlogs are almost always prior authorization system outcomes. They reflect whether a health plan has designed prior authorization to support clean, defensible decisions at scale.

At Mizzeto, we work with payer organizations to address this exact gap: connecting intake, clinical review, and policy logic so prior authorization decisions can be made efficiently, consistently, and explainably. Through Smart Auth, we help plans ensure requests arrive decision-ready: structured intake, reduced manual rework, and clinical evidence surfaced in context rather than buried in charts. Because in modern utilization management, sustained performance isn’t about pushing teams harder. It’s about removing the friction that never needed to be there in the first place.

Jan 30, 2024 • 6 min read