Every few years, health plan executives face the same question: stick with the core claims platform they know, or invest in the upgrade that promises better performance, new compliance capabilities, and future-proof scalability.

On paper, upgrading a core claims system seems straightforward. In practice, it is anything but. Behind every upgrade lies a tangle of operational disruption, hidden costs, and strategic decisions about whether incremental improvements are enough or whether the organization needs a bigger rethink of its core system.

For CEOs, the issue is no longer whether their core systems can process claims reliably; they can. The real question is how to navigate the complexity of implementation and upgrades in a way that preserves agility, controls cost, and positions the plan for a fast-changing regulatory environment.

The Implementation Challenge

Core claims systems are designed as flexible, rules-driven platforms that can accommodate the diverse needs of Medicaid, Medicare Advantage, and commercial lines of business. That flexibility is their strength, and also their weakness.

Each new implementation or upgrade requires an enormous degree of configuration, testing, and integration. Payers must align the new version of their claims system with the surrounding legacy systems (eligibility, prior authorization, provider directories, member portals), and each integration point introduces risk. A single misalignment in provider contracting rules or claims adjudication logic can cascade into payment errors, member dissatisfaction, or regulatory exposure.

Moreover, because most core systems are deeply customized during initial deployment, upgrades rarely feel like “plug and play.” They often require re-engineering workflows, re-validating interfaces, and retraining staff. What should be a version change can feel like a mini-implementation.

The Upgrade Bottleneck

Most payer CEOs hear the same refrain from their operations and IT leaders: “The upgrade will pay for itself in efficiency.” In theory, yes. New versions introduce better automation, compliance updates, and reporting tools. However, large-scale payer platforms were designed before the era of real-time interoperability. Many of their core workflows still rely on batch processing, extensive customization, and legacy integration patterns. As a result, upgrades are rarely simple. Migrations can stretch across months, often introducing new defects that disrupt daily operations. The bottleneck is in execution.¹

- Downtime risk: Even short disruptions in claims processing create reputational and financial exposure. A single day of delays can ripple into member grievances and provider friction.

- Testing burden: Because payers often maintain highly customized rule sets, regression testing is complex and resource-intensive. IT teams must simulate thousands of claims scenarios before go-live.

- Cost creep: What starts as a “standard upgrade” can balloon into a multi-million-dollar initiative once consulting, testing, downtime mitigation, and staff retraining are factored in.

For CEOs, the bottleneck isn’t simply technical. It’s strategic: How many resources should be spent on making the old platform incrementally better, versus rethinking whether a next-generation solution is needed?

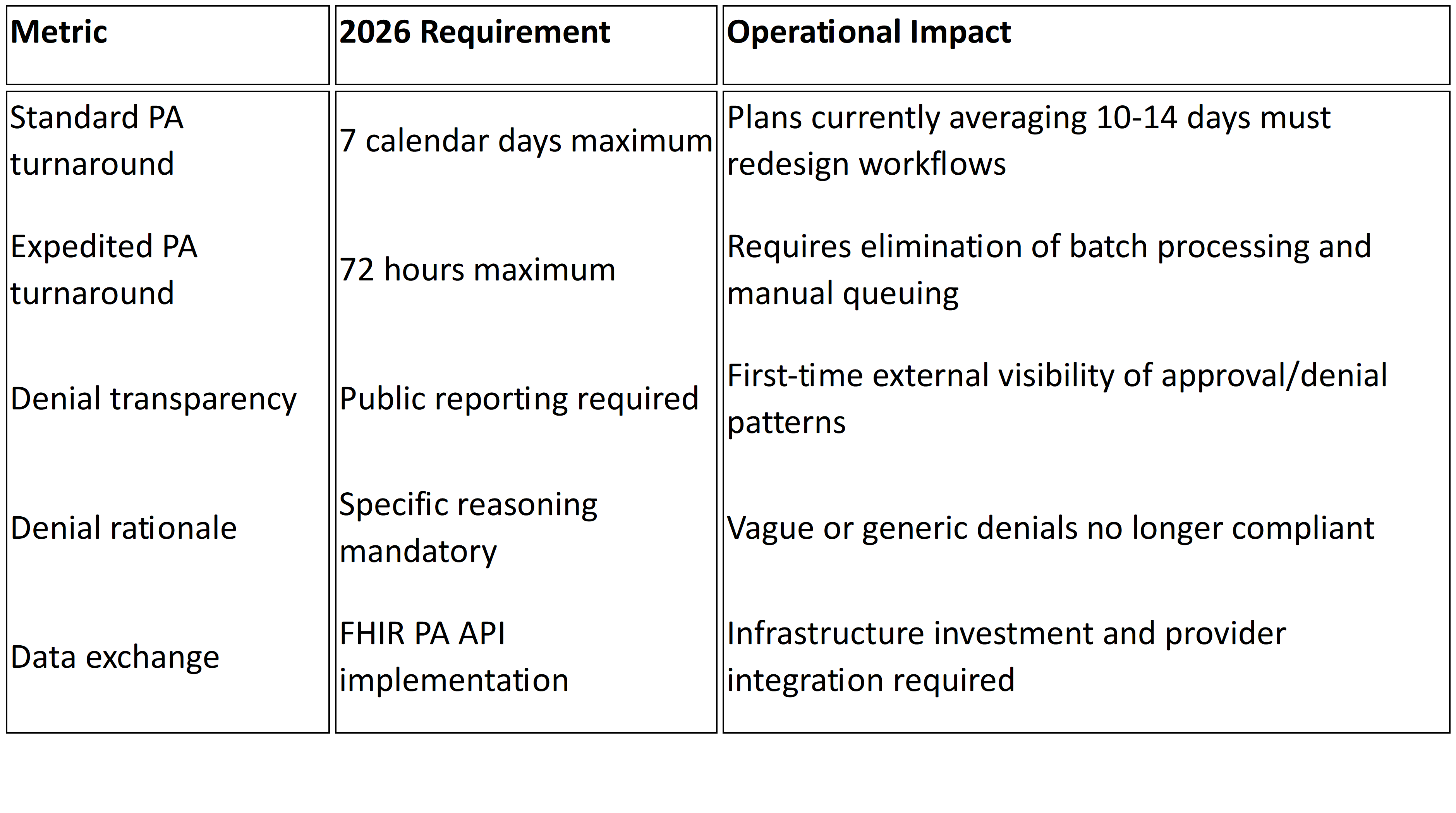

Regulatory Pressures

Upgrading a core claims system cannot be deferred indefinitely. Many core claims, enrollment, and utilization management systems remain siloed, limiting real-time insights and slowing operations. Regulatory requirements, such as CMS’s interoperability and prior authorization rule (CMS-0057-F), network adequacy reporting, and transparency mandates, further raise the stakes by demanding standardized APIs, automated reporting, and faster turnaround.² Without modernization, payers risk inefficiency, compliance gaps, and an inability to respond quickly to operational pressures.

The compliance bar is also moving faster than typical upgrade cycles. A system refreshed every three to five years may not keep pace with annual regulatory changes. This creates a structural tension: the need for compliance agility versus the slow, heavy cadence of traditional upgrades.

The Organizational Strain

When implementations succeed, they can streamline claims workflows significantly. But these gains are not automatic; upgrades also test organizational resilience. Claims staff must learn new interfaces. Clinical teams relying on UM and PA modules must adapt their workflows. The transition phase often requires running parallel systems and performing custom integration work with provider portals, EHRs, and third-party vendors. Finance leaders face budget overruns. And executives must explain to boards why a platform upgrade is consuming so much capital and time.

For many plans, this strain is amplified by workforce realities. IT and operations teams are already lean. Pulling them into months of testing and implementation work diverts attention from member experience, provider relations, and innovation. The opportunity cost is real.

Making the Strategic Choice

Here is where CEOs must step back and ask the bigger question: Is the goal to modernize your core claims system, or to modernize the enterprise?

- Incremental approach: Continue upgrading, absorb the disruption, and bolt on compliance tools as needed. This preserves continuity but risks technical debt and operational fatigue.

- Transformational approach: Use the upgrade decision as a pivot point to evaluate alternative platforms, cloud-native solutions, or modular architectures that align with where the industry is headed.

Neither path is inherently right or wrong. What matters is clarity: knowing the true costs of incrementalism versus transformation and aligning the decision with the payer’s broader strategy.

Toward a Smarter Upgrade Strategy

So how should CEOs approach the next round of implementation or upgrade? A few guiding principles stand out:

- Treat upgrades as enterprise projects, not IT projects. The impact crosses claims, UM, provider relations, finance, and compliance. Governance must reflect that.

- Model total cost of ownership. Factor in not just licensing and consulting, but also downtime, retraining, and opportunity cost.

- Benchmark against regulatory timelines. Ask whether the upgrade cycle will keep pace with CMS mandates, or whether external modules will still be needed.

- Invest in interoperability first. Whether sticking with your current claims system or moving beyond it, APIs, FHIR compliance, and real-time data exchange should be the non-negotiable foundation.

- Build for flexibility. The real risk is not just being behind today but being unable to adapt tomorrow.
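To make the total-cost-of-ownership principle concrete, here is a minimal sketch in Python that tallies the cost categories named above and shows how much of the total falls outside a budget that counts only licensing and consulting. The class name, its fields, and the dollar figures in the usage example are hypothetical placeholders for illustration, not industry benchmarks or a prescribed methodology.

```python
from dataclasses import dataclass


@dataclass
class UpgradeCostEstimate:
    """Hypothetical cost categories for a claims-platform upgrade, in dollars."""
    licensing: int
    consulting: int
    testing: int
    downtime_mitigation: int
    retraining: int
    opportunity_cost: int  # value of work deferred while staff are pulled into the upgrade

    def visible_cost(self) -> int:
        # The portion a "standard upgrade" budget typically captures.
        return self.licensing + self.consulting

    def total_cost_of_ownership(self) -> int:
        # Every category, including the ones that usually surface later.
        return (
            self.licensing
            + self.consulting
            + self.testing
            + self.downtime_mitigation
            + self.retraining
            + self.opportunity_cost
        )

    def hidden_share(self) -> float:
        # Fraction of total cost that sits outside the visible budget.
        total = self.total_cost_of_ownership()
        return 0.0 if total == 0 else 1 - self.visible_cost() / total


# Placeholder figures only; substitute the plan's own estimates.
estimate = UpgradeCostEstimate(
    licensing=1_000_000,
    consulting=1_500_000,
    testing=800_000,
    downtime_mitigation=300_000,
    retraining=400_000,
    opportunity_cost=1_000_000,
)
print(estimate.total_cost_of_ownership())  # 5000000
print(f"{estimate.hidden_share():.0%}")    # 50%
```

With these placeholder inputs, half of the total cost never appears in a licensing-and-consulting budget line, which is the kind of gap that turns a "standard upgrade" into a multi-million-dollar initiative.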

The Bottom Line

For payer CEOs, the question is not whether the platform can do the job. It can — and does, for millions of members nationwide. The real issue is whether the cost, complexity, and cadence of implementation and upgrades align with the demands of a regulatory environment that moves faster than traditional IT cycles.

Compliance is non-negotiable. Execution at speed and scale is existential.

At Mizzeto, we help health plans navigate the challenges of implementing and upgrading their core claims systems, turning complex technology transitions into smooth, high-impact changes. Our services streamline operations, modernize claims processing, and promote connectivity between disparate systems. By breaking down silos, automating data exchange, and delivering real-time operational insights, we help plans turn upgrades into a foundation for resilience, compliance, and measurable ROI.

Upgrading a claims system is more than a technical project—it’s a test of whether a health plan can translate technology into agility, compliance, and measurable impact.